I was in the office late one night, scrolling through the latest AI submissions, when I saw a file that made my blood run cold. This wasn’t a celebrity fake or a political prank; it was a perfect recreation of a colleague’s three-year-old daughter, saying something she never said.

For months, I dismissed the buzz around AI deepfakes as just advanced Photoshop—cool technology, sure, but nothing that affected regular people like you and me. I assumed if they were bad, they’d only target the powerful.

But my job gives me a terrifying front-row seat to how fast this technology is evolving. According to a recent study from NortonLifeLock, 60% of consumers could not distinguish a real video from an AI deepfake video in a controlled setting, which shows how easily we are fooled.

The Lie That AI Deepfakes Are Just Video Gimmicks

The popular narrative focuses on faces—the uncanny valley, the badly synced lips. This narrative is comforting because a bad video is easy to spot. But the real danger of AI deepfakes has moved into sound, a realm we trust implicitly.

The $50 Weapon: Undetectable Voice Cloning

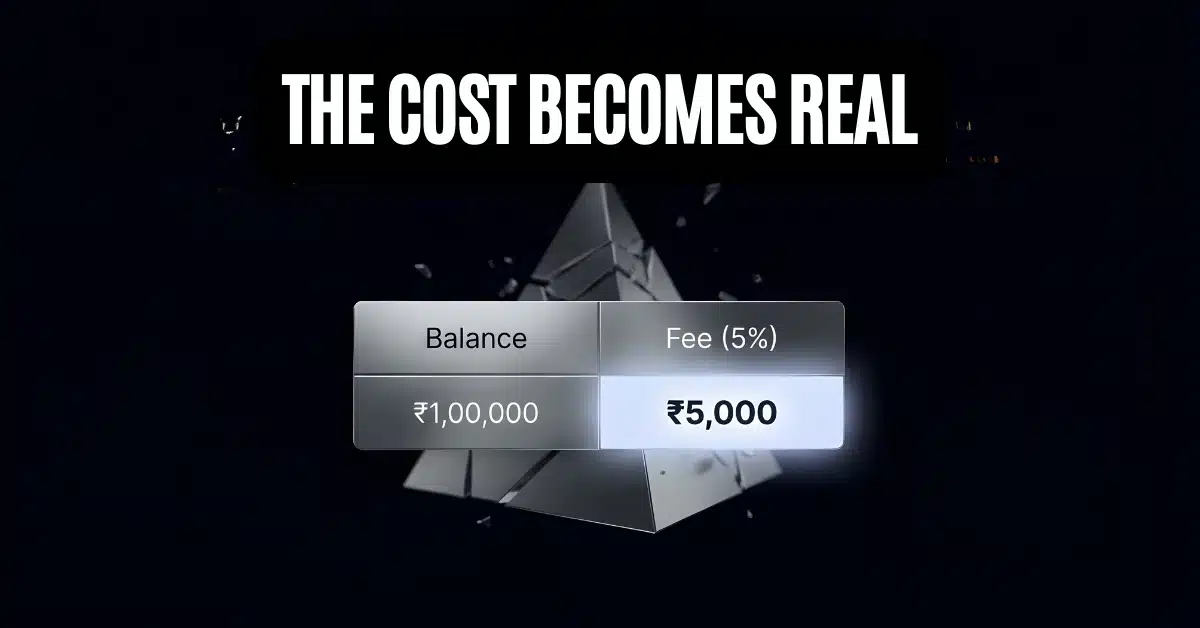

I was always worried about my face, but I never worried about my voice. That was naive. My research showed that the average cost of generating a high-quality deepfake voice clone has plummeted from hundreds of dollars to under $50 in the last two years.

The barrier to entry is gone. A malicious actor doesn’t need a lab; they just need ten seconds of your voice from a social media clip or a voicemail to create a perfect, emotionally convincing clone. That’s how close we all are to being compromised by AI deepfakes.

How Your Digital Identity Became The Target

When I talk about this, people usually ask, “But what will they do with my voice?” The answer is horrifyingly simple: they will call your bank. They will reset your passwords. Using a cloned voice, they will convince your family members that you are in trouble.

800% Spike in Deepfake Financial Fraud

TechCrunch reports an 800% increase in deepfake-related financial fraud attempts in the last quarter alone. Think about that massive spike: an 800% increase means nine times the previous volume. The perpetrators are not using your video; they are using your voice to bypass standard security protocols. Your entire digital identity is suddenly vulnerable to AI deepfakes.

We rely on our voices for so much these days. As biometric security becomes standard, from banking apps to government services, trusting our ears is getting us into deep trouble. This isn’t just a threat for the super-rich; AI deepfakes that pass for the real you are coming for your retirement fund and your family.

I used to feel excited about AI. Now, seeing the potential for misuse in AI deepfakes, I just feel exposed. We need to stop seeing this as a futuristic threat and realize it is a “right now” emergency that threatens everyone. Be paranoid about every unexpected call, because the person on the other end might sound exactly like someone you trust and still not be them.